So I’m getting some property out in the countryside. Here is my video about what to look for when shopping for a rural property. Here is the link to my checklist. It’s an OpenOffice document.

Author Archives: cagney

Story Time

Scope creep is the expansion of your project goals. When I started, I had no education or experience in robotics. Some would argue I still don’t. But I had a few personal goals in 2016. One of those was to learn about robotics. It all started with the realization that maybe […]

Advanced RL

This post will organize some ideas and the topology of advanced reinforcement learning. Once you understand the investment needed for current ML solutions, it becomes apparent how important this is. The problem with so many deployed ML solutions is fragility and data consumption. These problems have been known and a variety of solutions have been explored. The […]

Machine Learning RoadMap with DL4J

I have been working with DL4J like crazy and figured I would leave a post up about it. Here is a GitHub repo with the example projects. https://github.com/cagneymoreau/RoadMap I created the repo because I had all these small projects that are sequential in nature and thought I might as well post them in case someone else is […]

Video Streaming Update 2020 – Robotic Vision

Since my old video streaming tutorial gets a lot of views, I wanted to add some new info and an introduction. If you are building a robot and need to stream video, this is for you! Why Android to desktop - Android: The Android device I use is an old, old v21 phone. I can […]

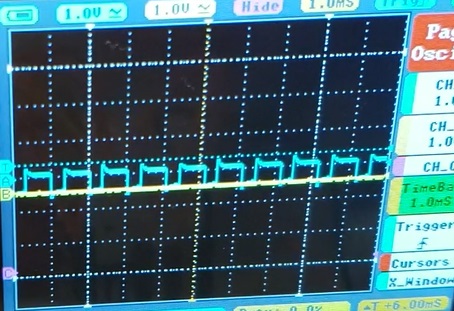

0-10 to 5 pwm

I purchased a diode laser from Amazon. It is bigger than my older laser and has PWM, because I’m fancy. But I needed 5V PWM, and my Mach3/breakout board provides 0-10V analog. The easiest solution I could think of was once again to slap a Nano on it. Here I am testing the circuit. The circuit uses 2 […]

Casting aluminum a bit more

I have had some more practice with casting aluminum, and now I have even upgraded my oven. My original oven’s electrical plan did not account for wire durability based on wattage and length of wire, so it quickly wore out. I also attached an Arduino Nano to the oven so I could quickly enter rising […]

3d printing

So 3D printing has made me pretty lazy. I really hate milling aluminum when I can just 3D print. I have 3 printers right now: 2 FDM and 1 SLA. I never use the SLA, tbh. I bought the Ender 3 printers and upgraded them with new firmware, a Raspberry Pi, an auto level and a […]

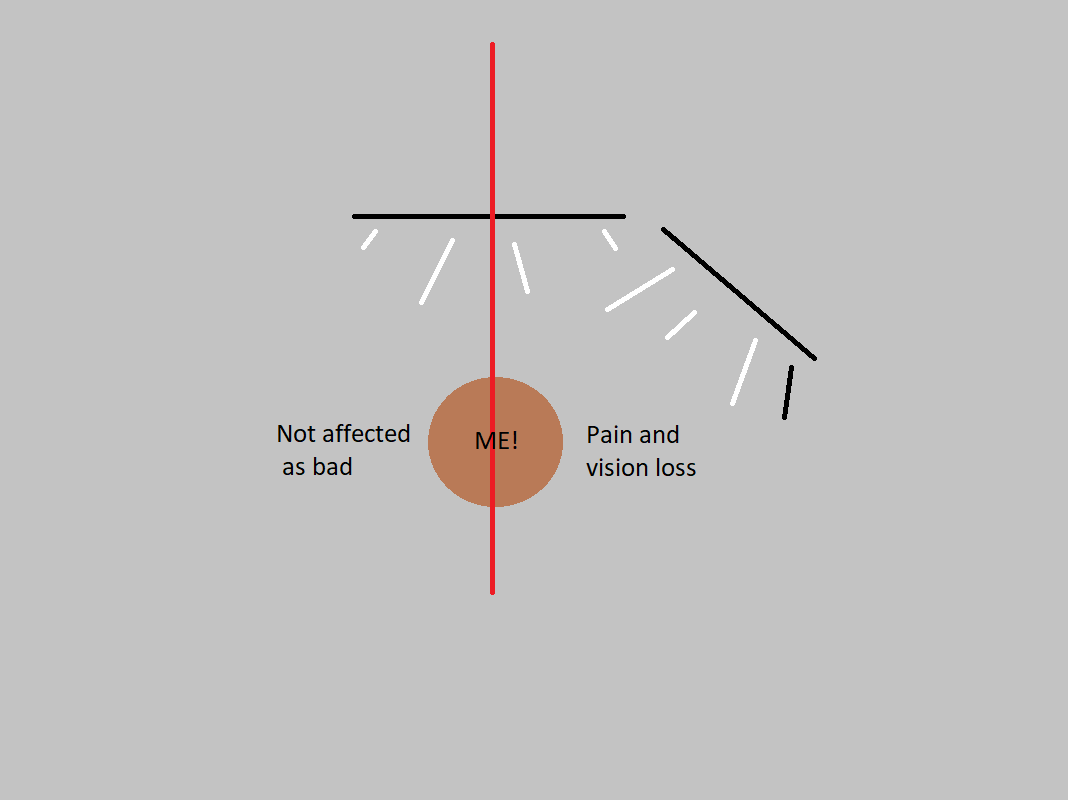

Computer Screens Cause Blindness

If you didn’t know, count yourself lucky to find out now. I was dumbfounded and angry when I found out. I had unknowingly subjected myself to an experiment and lost some vision in my right eye as a result! Below is my computer setup with two screens. After about a year of this my right […]

Toyota Tundra Backup Camera

Vehicle backup cams are kind of a pain to install. This was no exception, but the results are nice. I installed this in my ’09 Tundra with this kit from Amazon. Please use the link, as it helps support my website. Open and in reverse. When it’s closed. Backside. The kits only […]