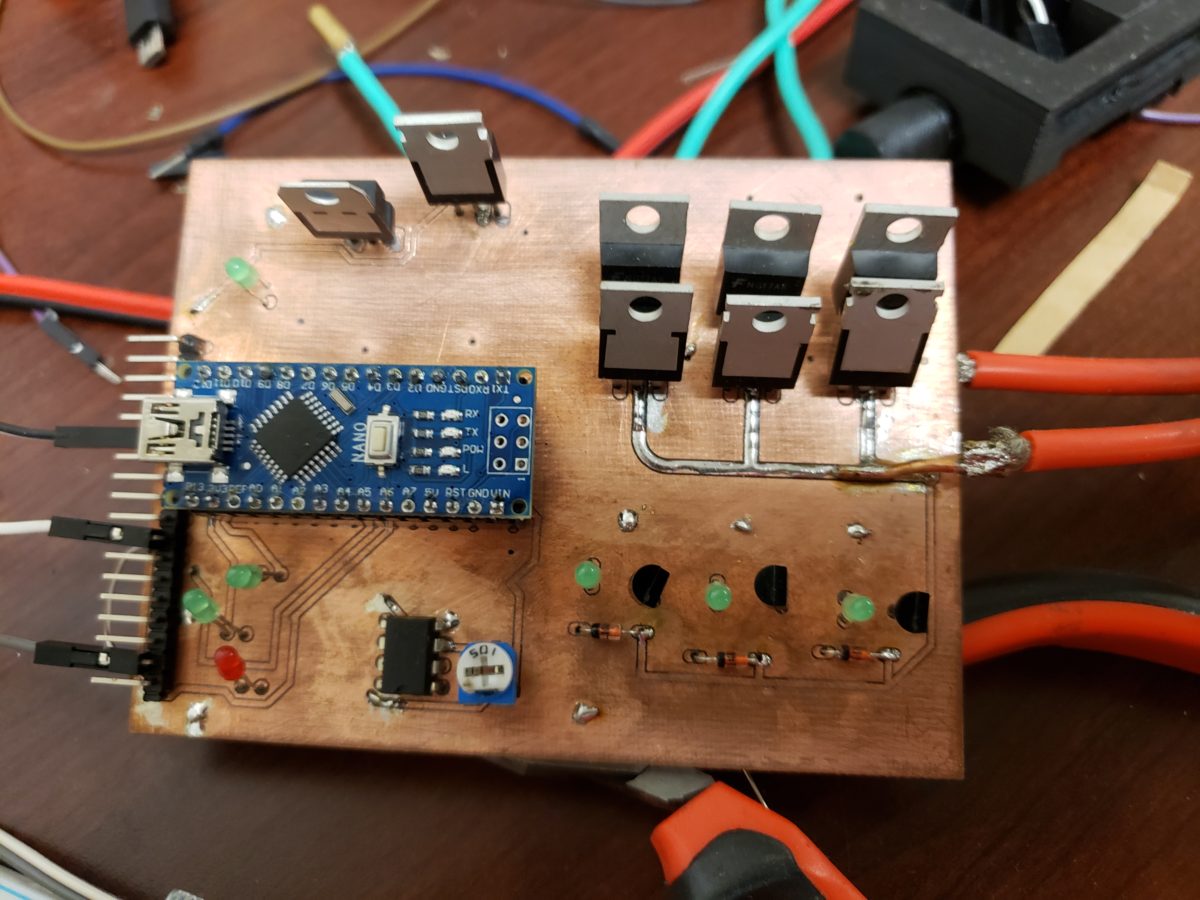

Every project I take on teaches me something new. Oddly enough, it's never humility. My recent design of an electronic speed controller for a brushless motor was a long and winding road toward understanding how a motor can be controlled. The why is simple. If you want to build a robot, there are zero…I […]

Author Archives: cagney

Gear Design

1. Types of gears
2. Gear arrangements
3. Considerations: strength, speed, friction/noise/vibration
4. Layout calculations
5. Hypocycloid
6. Differential planet

1) Types of gears

Involute gears fall into several categories. Gear arrangements and types of gears are often treated as interchangeable terms, but in my mind they are different. […]
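The outline above lists layout calculations without showing them. As a hedged illustration only, assuming a pair of standard external spur gears sized by metric module with full-depth teeth (addendum equal to one module), the basic layout numbers follow directly from the tooth counts. This is a generic textbook sketch, not the post's own method, and `SpurGearPair` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class SpurGearPair:
    """External spur-gear mesh described in metric module terms (assumed standard teeth)."""
    module_mm: float   # module m = pitch diameter / tooth count
    pinion_teeth: int  # z1, the driving gear
    gear_teeth: int    # z2, the driven gear

    def pitch_diameters(self) -> tuple:
        # Pitch diameter d = m * z for each gear.
        return (self.module_mm * self.pinion_teeth,
                self.module_mm * self.gear_teeth)

    def outside_diameters(self) -> tuple:
        # Full-depth teeth assumed: addendum = m, so outside diameter = m * (z + 2).
        return (self.module_mm * (self.pinion_teeth + 2),
                self.module_mm * (self.gear_teeth + 2))

    def center_distance(self) -> float:
        # Pitch circles touch, so a = (d1 + d2) / 2 = m * (z1 + z2) / 2.
        return self.module_mm * (self.pinion_teeth + self.gear_teeth) / 2

    def ratio(self) -> float:
        # Speed reduction from pinion to gear: i = z2 / z1.
        return self.gear_teeth / self.pinion_teeth
```

For example, `SpurGearPair(1.5, 12, 36)` gives a 3:1 reduction with the shaft centers 36 mm apart. Hypocycloid and differential planetary layouts need their own relations and are not covered by this sketch.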

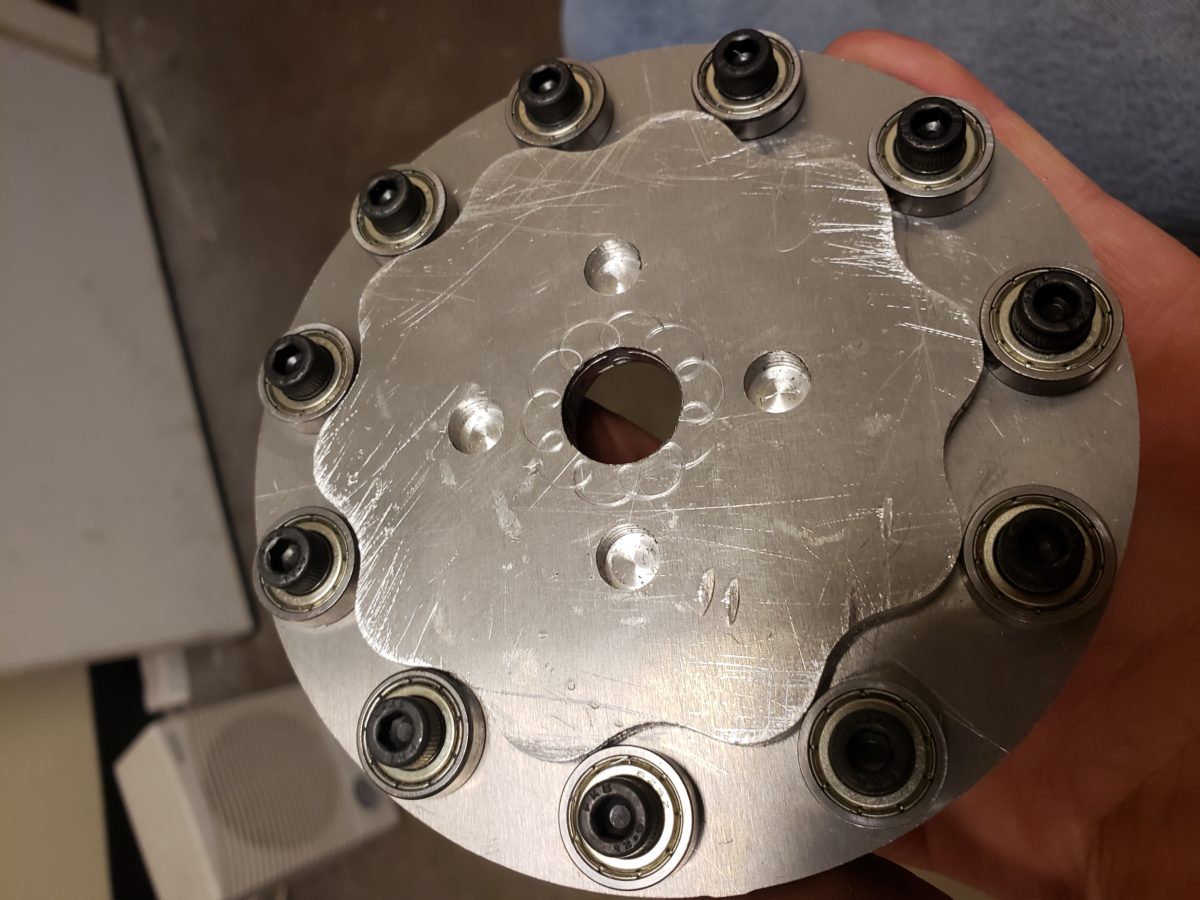

The Struggle of Custom Parts

Custom parts are hard to come by, while off-the-shelf parts are literally forming a trash island in the ocean. This is my dive into making custom parts. I could have approached it a few different ways, but my limitations are as follows. Time: I don’t want to spend 10 hours milling a […]

System -> model -> model -> System

What is the difference between working hard and working smart? I attempt to define that here. A very long time ago I read a Richard Maybury book that touched on how the brain attempts to model how the world around it works. Being a curious person and always trying to sharpen my understanding […]

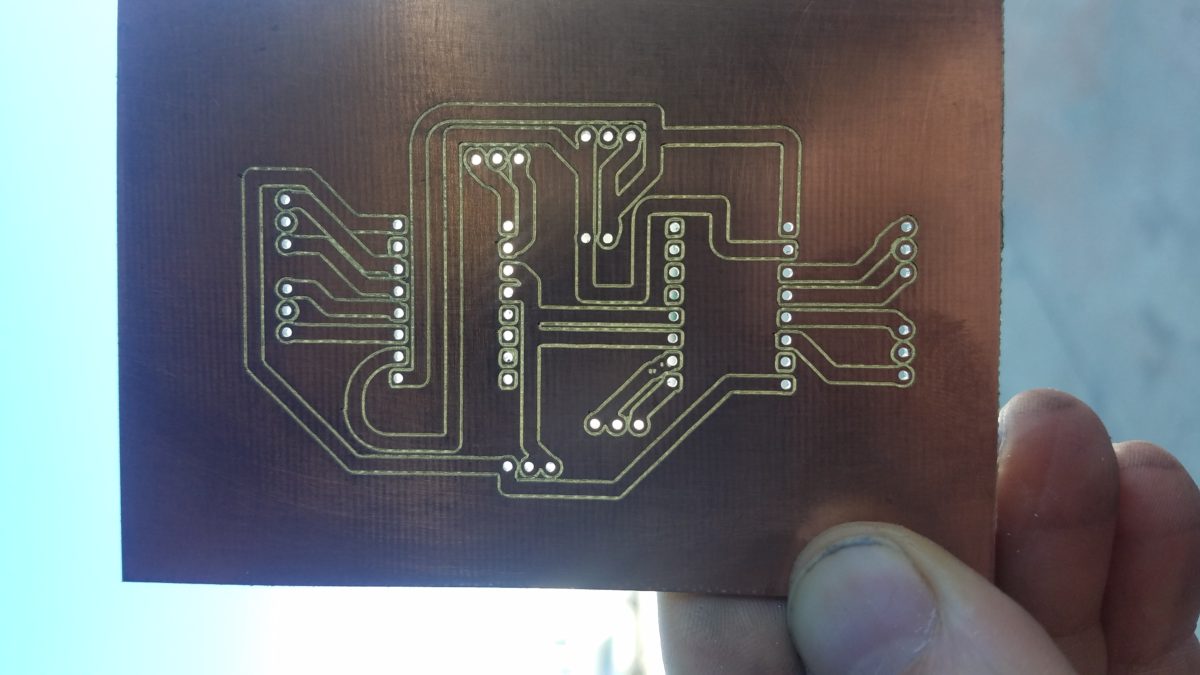

Milling a PCB

To speed up my prototyping, and with an eye toward possible small-scale manufacturing, I have begun milling my circuit board designs. This picture should make it clear why this is so important. This is how I, and many others, make circuit boards at home: by wrapping wire around the back […]

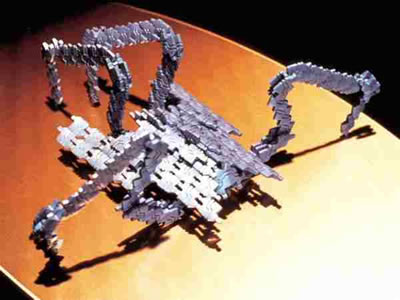

Considerations on building a robot

Why… Robotics has always been one of my childhood interests. I think most people have at least some fascination with robots when they are young. Over the last several years of working, I began to realize that robots in construction are really underdeveloped. I believe this because I […]

Android data analysis and error handling

The featured picture for this post is actually a CNC project where my end mill broke moments before completing. But if you’re an Android developer, you have probably seen the little gear icon in your email inbox or, more recently, emails from Fabric. Scroll down to the bold title to skip my rambling musings. These […]

Stream Video From Android Part 8 – Tips, Tricks and Tests

Want a little bit more? You got it. A bit of backstory on my experience: I wrote these blog posts because it seemed there were reference manuals written by experts for experts, with no bridge for the beginner to cross into these subjects. This article plus the resources I mentioned in the first post […]

Stream Video From Android Part 7 – Depacketize and Display

Getting those packets onto a screen. There will be one more post after this covering some extra classes and techniques I used to get this done, so if I gloss over something here, make sure to check there to get your code straight. -> Get the videodecoder code here <- -> Get the imagedecoder […]
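Depacketizing starts with separating each packet's RTP header from its payload. The sketch below is not the author's videodecoder or imagedecoder code; it is a minimal Python illustration, assuming RTP version 2 as laid out in RFC 3550, of how the header fields could be read and the padding, CSRC list and header extension skipped. `RtpPacket` and `parse_rtp` are hypothetical names, and reassembling a full video frame from several payloads is a separate step not shown here.

```python
import struct
from dataclasses import dataclass

RTP_FIXED_HEADER_LEN = 12


@dataclass
class RtpPacket:
    marker: bool
    payload_type: int
    seq: int
    timestamp: int
    ssrc: int
    csrcs: tuple
    payload: bytes


def parse_rtp(packet: bytes) -> RtpPacket:
    """Split one RTP packet (RFC 3550 layout assumed) into header fields and payload."""
    if len(packet) < RTP_FIXED_HEADER_LEN:
        raise ValueError("packet shorter than the 12-byte RTP fixed header")
    b0, b1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:RTP_FIXED_HEADER_LEN])
    if b0 >> 6 != 2:
        raise ValueError("not RTP version 2")
    has_padding = bool(b0 & 0x20)
    has_extension = bool(b0 & 0x10)
    csrc_count = b0 & 0x0F

    # Optional list of 32-bit contributing-source identifiers follows the fixed header.
    offset = RTP_FIXED_HEADER_LEN
    csrc_end = offset + 4 * csrc_count
    if len(packet) < csrc_end:
        raise ValueError("truncated CSRC list")
    csrcs = struct.unpack(f"!{csrc_count}I", packet[offset:csrc_end])
    offset = csrc_end

    if has_extension:
        if len(packet) < offset + 4:
            raise ValueError("truncated header extension")
        # 16-bit profile-specific id, then the extension length in 32-bit words.
        _profile, ext_words = struct.unpack("!HH", packet[offset:offset + 4])
        offset += 4 + 4 * ext_words

    end = len(packet)
    if has_padding:
        # The last octet counts the padding bytes, including itself.
        end -= packet[-1]
    if offset > end:
        raise ValueError("header and padding overrun the packet")

    return RtpPacket(
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        seq=seq,
        timestamp=timestamp,
        ssrc=ssrc,
        csrcs=csrcs,
        payload=packet[offset:end],
    )
```

A receiver would typically buffer payloads by `seq` and treat a set `marker` bit as the end of a video frame before handing the data to a decoder.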

Stream Video From Android Part 6 – Packetize RTP

I know, you deserve a nap, but please hang in there. The file I’m referencing is in the last post if you need it. Also, MAJOR WARNING HERE!!! I did not test my RTP packet code against other software. There may be errors because I simply wrote what seemed to make sense and then wrote […]
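For comparison with the packetizing described in this part, here is a minimal Python sketch of building the 12-byte RTP fixed header from RFC 3550 and splitting one encoded frame across several packets. It is not the post's code: the dynamic payload type 96, the naive fixed-size chunking and the names `build_rtp_header` and `packetize_frame` are assumptions for illustration. Real H.264 streams should instead be fragmented with the FU-A scheme from RFC 6184.

```python
import struct

RTP_VERSION = 2


def build_rtp_header(payload_type: int, seq: int, timestamp: int, ssrc: int,
                     marker: bool = False) -> bytes:
    """Pack a 12-byte RTP fixed header with no padding, extension or CSRCs."""
    if not 0 <= payload_type <= 127:
        raise ValueError("payload type must fit in 7 bits")
    byte0 = RTP_VERSION << 6                         # V=2, P=0, X=0, CC=0
    byte1 = (0x80 if marker else 0) | payload_type   # marker bit + 7-bit payload type
    return struct.pack("!BBHII", byte0, byte1,
                       seq & 0xFFFF,                 # sequence number wraps at 16 bits
                       timestamp & 0xFFFFFFFF,
                       ssrc & 0xFFFFFFFF)


def packetize_frame(frame: bytes, max_payload: int, payload_type: int,
                    first_seq: int, timestamp: int, ssrc: int) -> list:
    """Naively split one encoded frame into RTP packets (hypothetical helper).

    Every packet shares the frame's timestamp, sequence numbers increase by
    one, and the marker bit is set only on the frame's last packet."""
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    chunks = [frame[i:i + max_payload] for i in range(0, len(frame), max_payload)] or [b""]
    packets = []
    for n, chunk in enumerate(chunks):
        header = build_rtp_header(payload_type, first_seq + n, timestamp, ssrc,
                                  marker=(n == len(chunks) - 1))
        packets.append(header + chunk)
    return packets
```

Keeping one timestamp per frame and setting the marker only on the final packet lets a receiver tell where each frame ends; for video the timestamp usually advances on a 90 kHz clock.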