2020 update: I go over better methods on Android and give some quick ffmpeg tips. I’m not sure how you got here, but if you need to stream or dissect video, this might be your lucky day. Whenever I write a new article my biggest joy is choosing an image to represent […]

Category Archives: software

Android Swipe Functionality

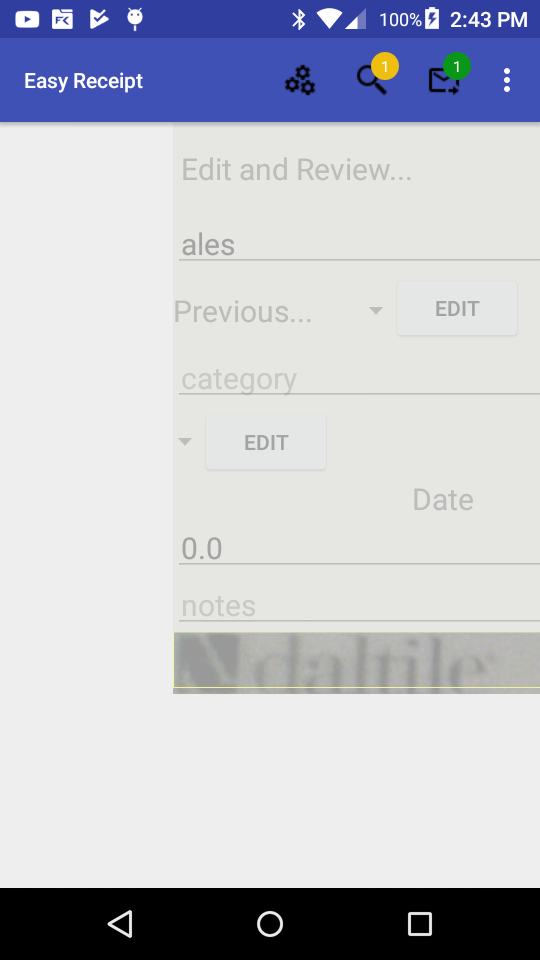

I needed my Easy Receipt app to do a few simple things:

- Give the user a swipeable way to review receipts.
- Show data and an image in that view.
- Detect left and right swipes.

The basic components for this are as follows. Layouts: I used two […]
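For the left/right detection, here is a minimal sketch of one way to classify a horizontal swipe from the touch-down and touch-up coordinates, written in plain Java so it runs anywhere. The `SwipeClassifier` class, its `classify` method, and the 100-pixel threshold are illustrative assumptions, not the app's actual code; on Android the coordinates would typically come from touch events.

```java
// Hypothetical helper: decides whether a gesture was a left or right swipe.
final class SwipeClassifier {

    enum Direction { LEFT, RIGHT, NONE }

    // Assumed minimum horizontal travel (in pixels) before a gesture counts as a swipe.
    private static final float MIN_DISTANCE_PX = 100f;

    private SwipeClassifier() {}

    // downX/downY: where the finger touched down; upX/upY: where it lifted.
    static Direction classify(float downX, float downY, float upX, float upY) {
        float dx = upX - downX;
        float dy = upY - downY;

        // Ignore short movements and gestures that are mostly vertical.
        if (Math.abs(dx) < MIN_DISTANCE_PX || Math.abs(dx) <= Math.abs(dy)) {
            return Direction.NONE;
        }
        return dx > 0 ? Direction.RIGHT : Direction.LEFT;
    }

    public static void main(String[] args) {
        System.out.println(classify(300f, 500f, 80f, 520f));  // finger moved left
        System.out.println(classify(80f, 500f, 300f, 480f));  // finger moved right
        System.out.println(classify(100f, 100f, 120f, 400f)); // mostly vertical drag
    }
}
```

Comparing the horizontal travel against the vertical travel keeps a scroll through the receipt data from being mistaken for a swipe to the next receipt.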

Android Camera2 API

If you are building an app with the Android Camera2 API, here are my thoughts after fighting with it. (Full code at the bottom for copy-paste junkies.) This API was a little verbose: I used about 1200 lines of code. It could probably be done more easily, but if you want something custom here is […]

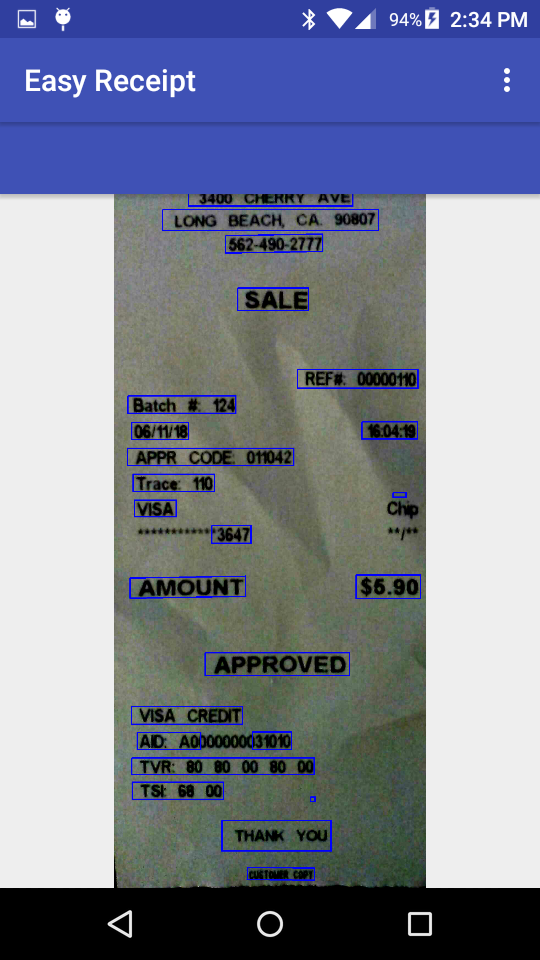

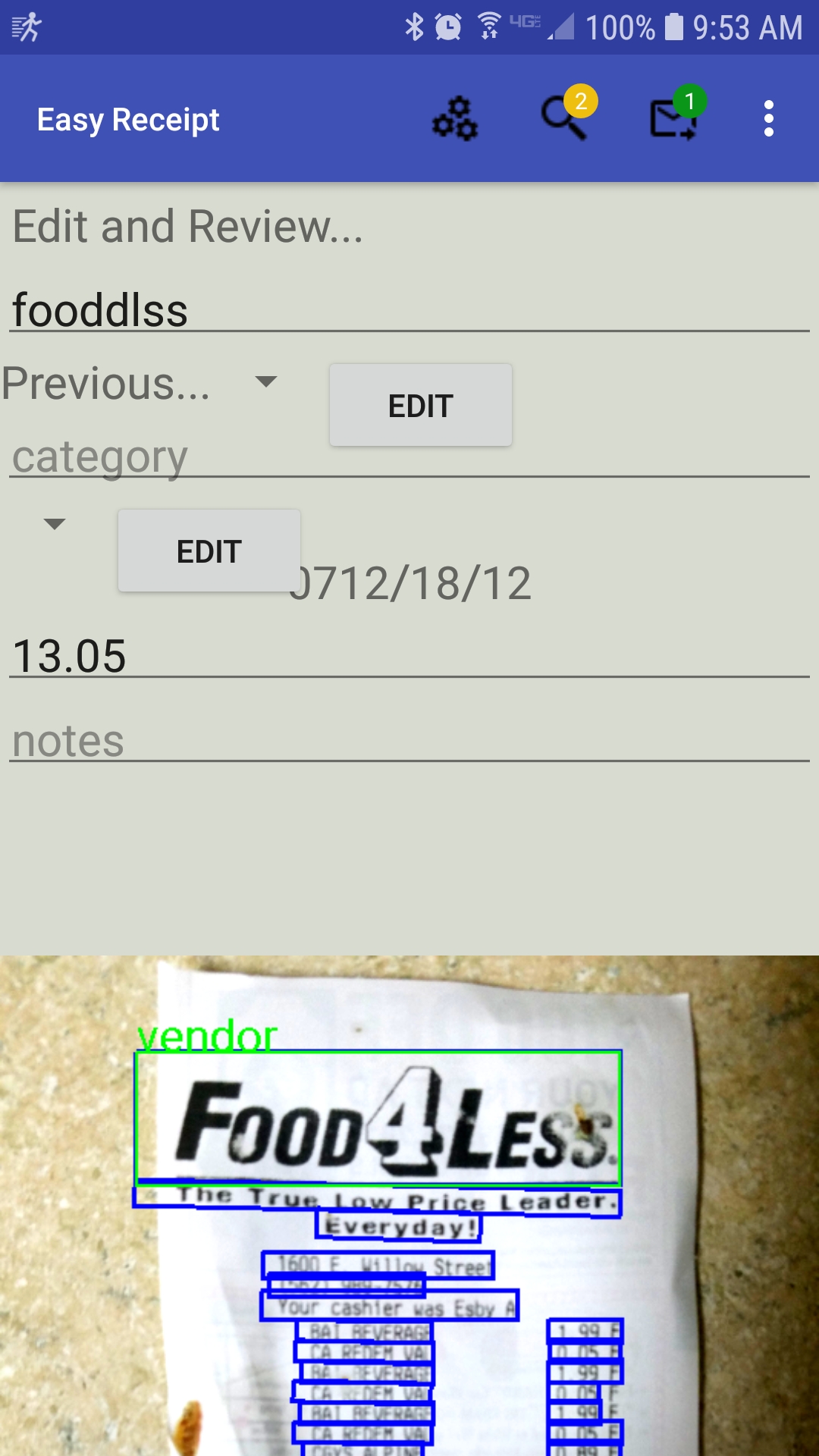

Working with Google Vision API on Android

Thanks to Google's text recognition API, I was able to effortlessly get text from the receipts I wanted to parse. In the picture above you can see I created a method I could pass images into, which returned results through a callback. The strategy was simple. Here is the link I used to set […]

Easy Receipt

Easy Receipt is an app I made that allows you to easily organize your receipts. Anyone who has had to track their receipts knows the labor of carrying a folder around, then manually entering them into a computer and probably scanning them as well. This app is CONVENIENT. Just take a picture […]

Electric Skateboard

Describing my electric skateboard build. I have only driven this board seven miles, so I can't say how far it will go. But I can say that it's scary fast and very fun! The electrical work was a cinch! Below you can see the layout of the parts needed for the electronics. […]

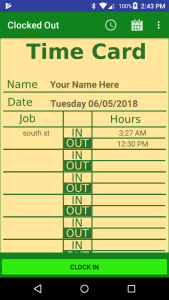

Updating the easy time card app

Updated and revised. After releasing the Easy Time Card app I realized that it looked very dull and relied heavily on Android's basic graphics. It was a utility app I made for myself, so early on this was OK. But after releasing it I wanted it to look […]

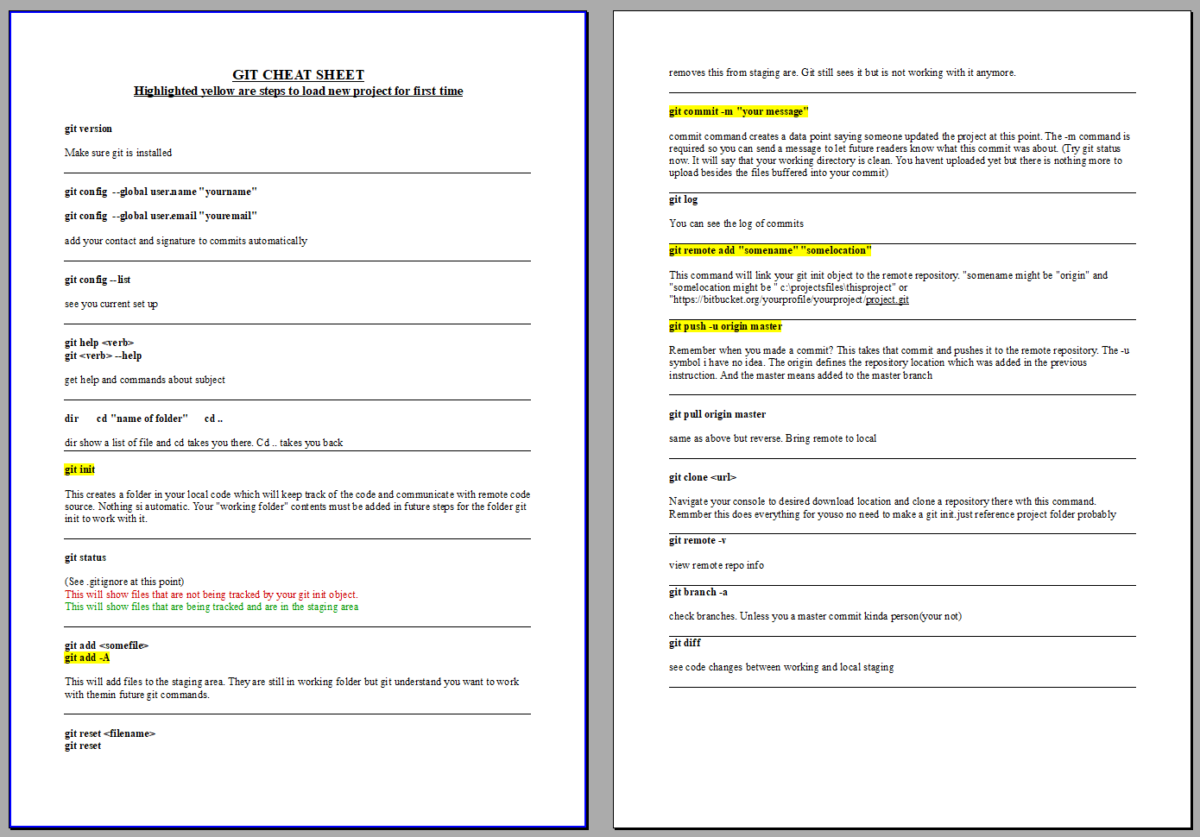

Git Hub Tutorial / Cheat Sheet

Using Git was a bit of a headache the first time around. I remember complaining about it every five minutes on day one. After a few days it became a trusted friend. Here is the tutorial I wish I had found when learning to use it. Enjoy… and download the PDF […]

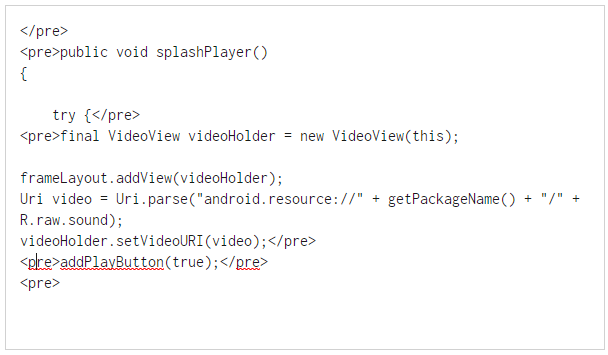

Adding a splash video player on Android

In my app I wanted to include an introductory video loaded directly into the app instead of pulled from an internet source. Once the app was created, I used a tool called HandBrake, which compressed my video into just a few megabytes. Then I applied the following code… My main activity has two […]

Building a PDF programmatically on Android

In my recent project I needed to build a PDF and email it to a user. Android has a great library for this, and I found it only took a few hours to get a reasonably readable PDF onto my screen. The basic outline goes as follows: `// create the pdf` `PdfDocument reportDocument = new` […]